A while ago I bought a cheap keyboard at a car boot sale with the idea of turning it into a MIDI keyboard to use with music-making software. However, when I got it home and started using it, I decided that it might be good to keep its original functionality intact. So I started thinking about a way to add MIDI functionality without breaking what was already in place. But then I had another idea. Instead of modifying the keyboard, would it be possible to write a small program that could detect the note being played and send a MIDI signal to the operating system, and thus to the music software I want to control, based on when a key is pressed and released? This is how I got there.

How this works

There are plenty of better explanations of this elsewhere on the internet, but I still want to give a short description of how it works. Any given waveform can be expressed as the sum of a set of sine waves of different frequencies and amplitudes. Translating a waveform from its raw state into a function that maps the frequency of each component sine wave to its amplitude is called a Fourier transform.

If you have a sine wave and you put it through a Fourier transform, the result will be 0 for every frequency except the frequency of the sine wave. If you have a waveform made up of two sine waves of different frequencies, the resulting Fourier transform will be zero for every value except those two frequencies. So, presuming that we have an instrument that produces something close to pure sine waves, it should be possible to determine what notes it is playing at a given time by performing a Fourier transform on the waveform it is producing. In fact, if multiple notes are playing, it should be possible to determine when they turn on and off independently of each other.
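To make the idea concrete, here is a minimal sketch of a naive discrete Fourier transform applied to a generated sine wave. It is for illustration only and is not the code from the program described here, which uses a library FFT instead. The function names `dft_magnitudes`, `loudest_slot` and `sine_wave` are made up for this example.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Naive O(N^2) discrete Fourier transform of a real signal.
// Returns the magnitude of each slot from 0 up to N/2 (the Nyquist slot).
std::vector<double> dft_magnitudes(const std::vector<double>& signal) {
    assert(!signal.empty());
    const std::size_t n = signal.size();
    const double pi = std::acos(-1.0);
    std::vector<double> mags(n / 2 + 1);
    for (std::size_t k = 0; k < mags.size(); ++k) {
        std::complex<double> sum(0.0, 0.0);
        for (std::size_t t = 0; t < n; ++t) {
            const double angle =
                -2.0 * pi * static_cast<double>(k * t) / static_cast<double>(n);
            sum += signal[t] * std::complex<double>(std::cos(angle), std::sin(angle));
        }
        mags[k] = std::abs(sum);
    }
    return mags;
}

// Index of the slot with the largest magnitude.
std::size_t loudest_slot(const std::vector<double>& mags) {
    assert(!mags.empty());
    std::size_t best = 0;
    for (std::size_t k = 1; k < mags.size(); ++k) {
        if (mags[k] > mags[best]) best = k;
    }
    return best;
}

// Builds `n` samples of a sine wave that completes `cycles` cycles in total.
std::vector<double> sine_wave(std::size_t n, double cycles) {
    const double pi = std::acos(-1.0);
    std::vector<double> out(n);
    for (std::size_t t = 0; t < n; ++t) {
        out[t] = std::sin(2.0 * pi * cycles * static_cast<double>(t) /
                          static_cast<double>(n));
    }
    return out;
}
```

Calling `loudest_slot(dft_magnitudes(sine_wave(64, 5.0)))` picks out slot 5, and every other slot is zero apart from floating-point rounding. That only holds exactly when the wave completes a whole number of cycles within the samples taken.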

Computers read in sound by taking samples of the current wave from the microphone very rapidly (typically 44100 times a second, or 44.1 kHz). We can use this to build a waveform and then perform a Fourier transform on it. In this case, since we have a set of values and not a continuous function, I am doing a discrete Fourier transform (DFT). To be precise, the program will use an algorithm called the Fast Fourier Transform, or FFT, which uses some tricks to speed up the process a lot.

Setting up the keyboard

Since the keyboard I’m using, a Yamaha PSS-11, has multiple modes that replicate different instruments, I needed to pick the mode most suitable for what I am doing. There were three criteria I used to select the instrument. First, I needed an instrument that would keep playing for as long as I held the key down. Something like a piano would not work, because even if you hold the key down the sound will fade out after a time, and the program would not be able to tell the difference between this and the key simply being released. Second, I needed something that produced a relatively clean sine wave so that pressing one note would not trigger other notes. And third, I needed the instrument whose lowest note had the highest frequency. This was because the lower the frequency (and therefore the longer the wavelength), the longer a run of samples you need to take in order to capture one full wave. After a bit of experimentation I found that the best instrument for this on my particular keyboard was number 29, the panflute.

Building the program

The first step in the process was to figure out how to get a stream of samples to be analysed. To do this I used the Simple DirectMedia Layer library, or SDL. SDL is a cross-platform library, roughly comparable to DirectX, that gives low-level access to audio, input devices and graphics hardware in a platform-agnostic way. The API for reading audio samples is straightforward, and it requires that a callback be provided to handle the samples. This function is where the FFT needs to be run, but initially I just had it output the sum of all the individual samples so I could test that the sound was coming through to the program properly.
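As a rough sketch of that first test, the function below has the same parameter shape as an SDL2 audio callback (an opaque user pointer, a byte buffer and its length in bytes) and simply totals the samples it is handed. It deliberately does not include or call SDL, so it can be tried on its own. The `CaptureStats` struct, the name `on_audio` and the signed 16-bit mono sample format are all assumptions made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Running totals filled in by the callback; a hypothetical debugging aid.
struct CaptureStats {
    long long sample_sum = 0;
    long long samples_seen = 0;
};

// Mirrors the shape of an SDL2 audio callback.
// Assumption: the buffer holds signed 16-bit mono samples in native byte order.
void on_audio(void* userdata, std::uint8_t* stream, int len) {
    assert(userdata != nullptr && stream != nullptr && len >= 0);
    auto* stats = static_cast<CaptureStats*>(userdata);
    const std::size_t count = static_cast<std::size_t>(len) / sizeof(std::int16_t);
    for (std::size_t i = 0; i < count; ++i) {
        std::int16_t sample = 0;
        // memcpy avoids alignment problems when reading from a raw byte buffer.
        std::memcpy(&sample, stream + i * sizeof(std::int16_t), sizeof(sample));
        stats->sample_sum += sample;
        ++stats->samples_seen;
    }
}
```

With silence the total stays at or near zero, and it starts moving as soon as sound arrives, which is enough to confirm that audio is reaching the program.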

Once the samples were coming through, I needed to find a way to perform the FFT on them. I could have done this the long way and written my own FFT, but there are a lot of libraries out there that do it, so I decided to use one of those. I tried a couple of different ones, but in the end the one that worked best for me was FFTW. Once I had this, I added some more logging to display the frequency in the FFT that had the highest amplitude. This was to be used later to map notes on the keyboard to frequency positions in the FFT, but I’ll get to that in a bit.

Once I had all of this, I built an algorithm that would look through a list of entries, each holding a note number, a frequency (represented as a slot number in the FFT) and an amplitude threshold for that frequency. The idea is that if the amplitude for a given frequency is over its threshold then the note is currently being pressed; if not, it's not being pressed. I could then use this to send MIDI key down and MIDI key up events. I stuffed this list with some default values to get going and then moved on to the next part.
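The lookup described above could be sketched roughly like this. The `NoteSlot` struct and `detect_notes` function are hypothetical names for illustration, and the real table in the program may be laid out differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One row of the lookup table: which FFT slot to watch for which MIDI note,
// and how loud that slot must be before the note counts as held down.
struct NoteSlot {
    int midi_note;
    std::size_t fft_slot;
    double threshold;
};

// Returns one flag per table row: true when that row's slot is over its threshold.
std::vector<bool> detect_notes(const std::vector<NoteSlot>& table,
                               const std::vector<double>& magnitudes) {
    std::vector<bool> pressed(table.size(), false);
    for (std::size_t i = 0; i < table.size(); ++i) {
        assert(table[i].fft_slot < magnitudes.size());
        pressed[i] = magnitudes[table[i].fft_slot] > table[i].threshold;
    }
    return pressed;
}
```

Keeping a separate threshold per note allows for the fact that different notes may come through at different volumes.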

Perfecting the note detection

Once I had the program up and running and basically doing what I needed in terms of processing the audio, I needed to map the frequency positions in the FFT to notes. There are ways to calculate this, but I did it the simplest way: I used the program I had already written to measure it for me. I went through each note in turn, noted which frequency had the highest amplitude and what that amplitude was, and then moved on to the next one.

At least that was the idea. The first issue I found was that the lowest notes on the keyboard were triggering the same position in the Fourier transform. This was because I was only taking 2048 samples to make the waveform. Now, the maximum frequency that a DFT can recognise is called the Nyquist frequency, and it is ½ the sampling rate. So, in order to extend the range of a DFT at the high end you need to increase the sample rate. However, in order to increase the resolution, that is, to narrow the gap between neighbouring slots, you need to increase the number of samples. For example, if you take 4 samples at 16 Hz and perform a DFT, the slots will represent 0 Hz, 4 Hz and 8 Hz. However, if you take 8 samples you will get a DFT that contains 0 Hz, 2 Hz, 4 Hz, 6 Hz and 8 Hz. Raising the sample rate would not have helped, for two reasons. Firstly, the width of each slot is the sample rate divided by the number of samples, so I would have had to increase the number of samples anyway so that they still covered enough time to capture the lowest frequency. Secondly, there were only a few sample rates to choose from on account of the abilities of my sound card, and I was already using the highest one available (96 kHz).
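The relationship between sample rate, sample count and slot frequencies can be checked with a few lines of code. This is a small illustrative helper, with the name `slot_frequencies` invented for this example. It relies on the standard fact that a DFT over N real samples has slots spaced at the sample rate divided by N, running from 0 Hz up to the Nyquist frequency.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Centre frequency in Hz of every slot, from 0 Hz up to the Nyquist frequency,
// for a DFT over `sample_count` real samples taken at `sample_rate_hz`.
std::vector<double> slot_frequencies(double sample_rate_hz, std::size_t sample_count) {
    assert(sample_count > 0 && sample_rate_hz > 0.0);
    const double slot_width = sample_rate_hz / static_cast<double>(sample_count);
    std::vector<double> freqs(sample_count / 2 + 1);
    for (std::size_t k = 0; k < freqs.size(); ++k) {
        freqs[k] = static_cast<double>(k) * slot_width;
    }
    return freqs;
}
```

`slot_frequencies(16.0, 4)` gives 0, 4 and 8 Hz, while `slot_frequencies(16.0, 8)` gives 0, 2, 4, 6 and 8 Hz. Doubling the number of samples halves the gap between slots, and the top slot stays at the Nyquist frequency.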

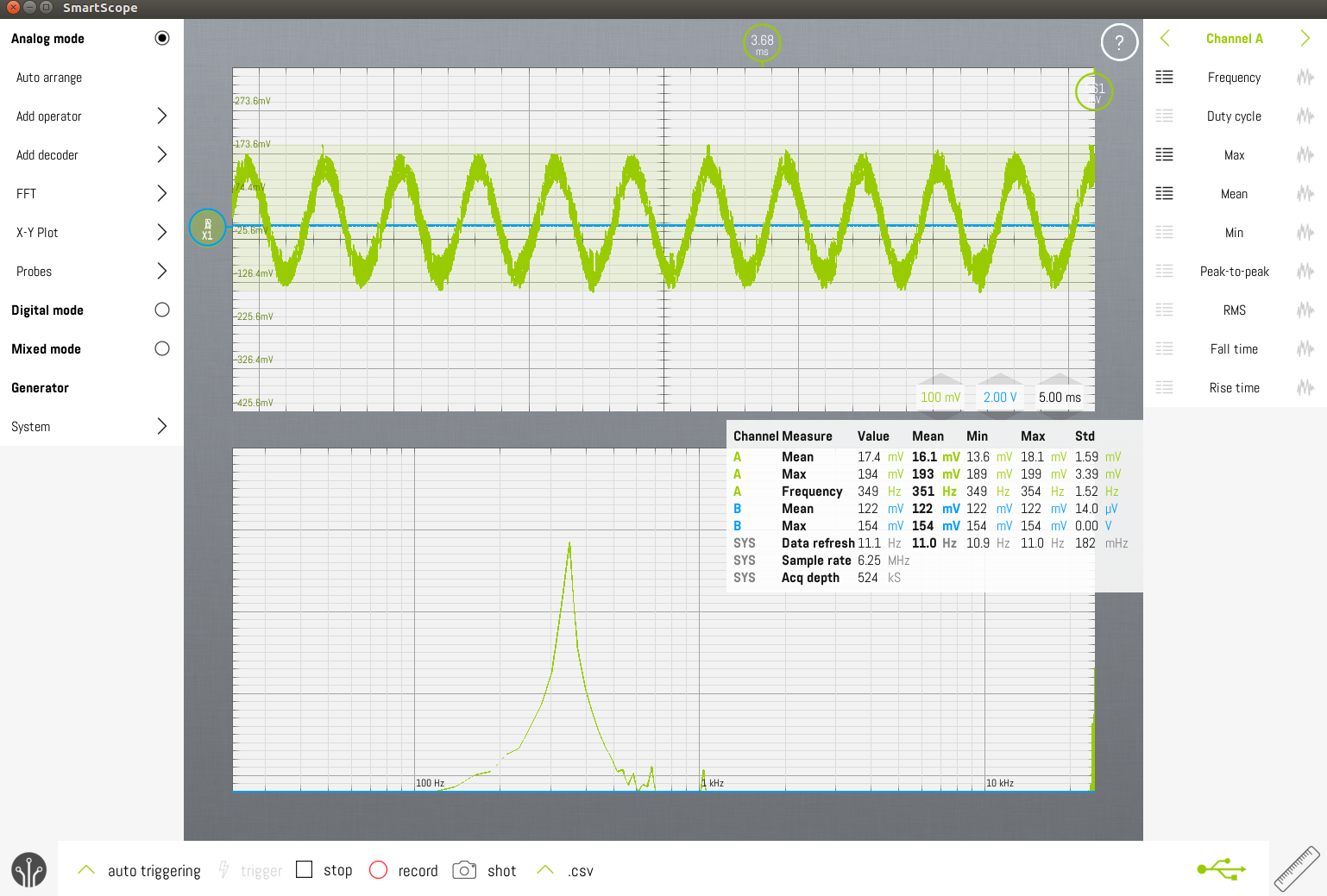

I took a look at the waveform of the lowest and second lowest notes on the keyboard with an oscilloscope. The lowest note had a frequency of 350 Hz, and the second lowest was 370 Hz. This meant that I needed to be able to resolve a difference of 20 Hz, and at 96 kHz this meant that I would need 4800 samples (96 kHz / 20 Hz = 4800) to be guaranteed to be able to differentiate between these two notes. SDL only takes samples in powers of 2, so I tested taking 4096 samples at a time, the nearest power of 2 below that, and I was now able to resolve these two notes. Since the distance between notes increases as you go up the scale, I knew I would probably be able to tell the higher notes apart as well.

The program that talks to my DSO analysing the lowest note
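The arithmetic behind the 4800-sample estimate and the choice of 4096 can be written out as a short sketch. The helper names here are invented for illustration and are not part of SDL or FFTW.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Smallest number of samples whose slot width is at most `resolution_hz`.
std::size_t samples_needed(double sample_rate_hz, double resolution_hz) {
    assert(sample_rate_hz > 0.0 && resolution_hz > 0.0);
    return static_cast<std::size_t>(std::ceil(sample_rate_hz / resolution_hz));
}

// Largest power of two that does not exceed `n` (n must be at least 1).
std::size_t power_of_two_at_most(std::size_t n) {
    assert(n >= 1);
    std::size_t p = 1;
    while (p <= n / 2) {
        p *= 2;
    }
    return p;
}

// Gap in Hz between neighbouring slots of the transform.
double slot_width_hz(double sample_rate_hz, std::size_t sample_count) {
    assert(sample_count > 0);
    return sample_rate_hz / static_cast<double>(sample_count);
}
```

At 96 kHz, `samples_needed(96000.0, 20.0)` is 4800 and `power_of_two_at_most(4800)` is 4096. `slot_width_hz(96000.0, 4096)` works out at 23.4375 Hz, slightly wider than the 20 Hz gap between the two lowest notes, which is why it needed testing rather than being guaranteed to work.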

Now no two notes were triggering the same position in the FFT, but there were some new problems. Sometimes a note triggered two positions close together in the transform, which is what you would expect if the note fell right between two frequencies in the transform. However, every single note also seemed to trigger a value in the very high frequencies of the transform, and this position seemed to get lower the higher the frequency of the note being played. These values were also all so high that they were in the range inaudible to humans. I assumed that they might be coming from the keyboard itself, so I decided to include them in the positions used to detect whether notes were currently being played or not.

After this it was a simple matter of writing code that checked which notes were currently being played, compared this to the values from the last time the check was done, and sent a message if a note had started or stopped being played.
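A minimal sketch of that comparison step, assuming the pressed state of each note is kept as one flag per note. `NoteEvent` and `diff_notes` are hypothetical names chosen for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A change in one note's state between two consecutive checks.
struct NoteEvent {
    std::size_t note_index;  // position of the note in the note table
    bool pressed;            // true = key went down, false = key came up
};

// Compares the previous and current pressed flags and reports only the changes.
std::vector<NoteEvent> diff_notes(const std::vector<bool>& previous,
                                  const std::vector<bool>& current) {
    assert(previous.size() == current.size());
    std::vector<NoteEvent> events;
    for (std::size_t i = 0; i < current.size(); ++i) {
        if (current[i] != previous[i]) {
            events.push_back({i, current[i]});
        }
    }
    return events;
}
```

Notes that are still held, or still silent, produce no event at all, so a key held down sends one message when it goes down and one when it comes up.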

Hooking it up to a MIDI program

Once I could distinguish between the notes, all that was needed was to output the MIDI messages and route them to a MIDI program. For this I used the RtMidi library. From this point I was able to use the aconnect command to route the messages from the output port created by RtMidi to the VMPK program. Here is a video of it all in action.
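For reference, the MIDI messages involved are only three bytes each. The sketch below builds note-on and note-off messages as byte vectors, which is the form RtMidi's output class accepts when sending a message. The helper names, and the choice to send a release velocity of 0, are assumptions made for this example, and nothing here calls RtMidi itself.

```cpp
#include <cassert>
#include <vector>

// Builds a MIDI note-on message: status byte 0x90 plus the channel (0-15),
// followed by the note number and velocity (both 0-127).
std::vector<unsigned char> note_on(int channel, int note, int velocity) {
    assert(channel >= 0 && channel < 16);
    assert(note >= 0 && note < 128 && velocity >= 0 && velocity < 128);
    return {static_cast<unsigned char>(0x90 | channel),
            static_cast<unsigned char>(note),
            static_cast<unsigned char>(velocity)};
}

// Builds a MIDI note-off message: status byte 0x80 plus the channel,
// followed by the note number and a release velocity of 0.
std::vector<unsigned char> note_off(int channel, int note) {
    assert(channel >= 0 && channel < 16);
    assert(note >= 0 && note < 128);
    return {static_cast<unsigned char>(0x80 | channel),
            static_cast<unsigned char>(note),
            static_cast<unsigned char>(0)};
}
```

Since the program only knows whether a note is on or off, and not how hard the key was hit, a fixed velocity such as 100 is one reasonable choice for every note-on.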

Further work

One obvious thing to do with this, if I end up doing this for multiple different keyboards, would be to put all of the different values for the keyboard into a config file. Right now all of the information specific to the PSS-11 is in notes.h. Wrapping this up into a bit of JSON or some other configuration file format and reading this in at runtime would be an absolutely necessary step to make this more user friendly.

This will work for a while, but I think I still want to do a hardware conversion on a keyboard. Although this is good for playing some notes into a DAW, I also want some sliders and knobs, and this program will not give me that. There is only so much you can do before having to get out the soldering iron and hack some electronics.